App Actions on Enterprise Devices (Part 1)

This is the first of two blog posts exploring whether the newly announced Shortcuts feature of App Actions will work well on Enterprise devices. This post considers built-in Intents; the second part will deal with custom Intents. A sample app to accompany this series is available at https://github.com/darryncampbell/App-Actions-Demo/

Google I/O 2021 announced the beta release of App Actions using the Android Shortcuts framework. This is an enhancement to the existing Google Assistant integration, which used actions.xml. The announcement centred on built-in Intents, first introduced in 2020, and the new custom Intents.

I noticed that one of the built-in Intents was "Get Barcode", so it made sense to experiment with App Actions on a Zebra device, especially since the I/O announcement included one of our major retail customers.

I have previously written about:

- How you can add voice recognition to your app using Google DialogFlow

- Using DataWedge to respond to voice commands offline

- Upgrading to V2 of the DialogFlow engine.

App Actions appear to address the same use cases (responding to user requests) without requiring integration with a backend server.

Setup

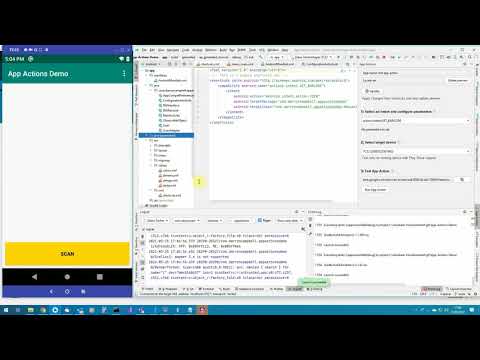

A new codelab for App Actions with Shortcuts was released alongside Google I/O 2021, so I followed that; there is also a webinar. The codelab covers the basic setup and prerequisites, such as uploading your app to an internal test track on the Play Store, signing in with the same Google account everywhere, and configuring the (very much alpha) Assistant test tool for Android Studio.

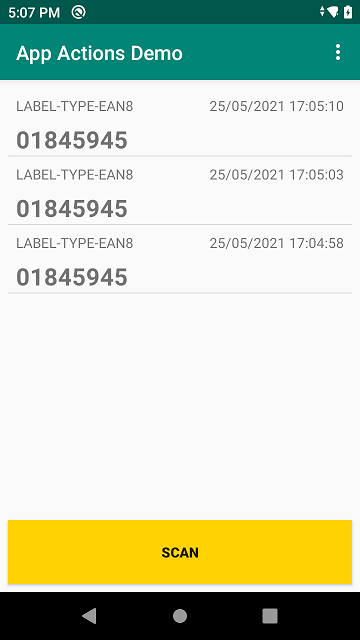

I had previously written an application in Kotlin to demonstrate barcode capture on Zebra Android devices, and I used it as the base app for adding App Actions.

I am testing with a Zebra TC52 running Android 10, though any Zebra Android device running Android 5.0 Lollipop or higher (for App Actions) and DataWedge 6.4 or higher should work. I also installed the separate Assistant app from the Play Store for a better user experience, but this is not required.

Built-In Intent

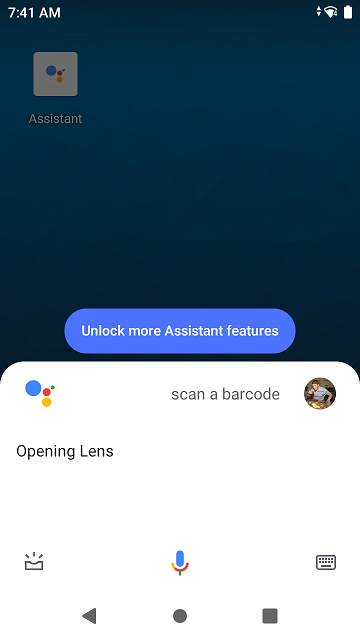

Did you know that if you ask the Google Assistant to "Scan a barcode", it will bring up Google Lens?

There is obviously a better way to scan barcodes on Zebra devices, and you can add capabilities to the Assistant by implementing one of the built-in Intents. Built-in Intents use predefined machine learning models on the device to recognise a user's intention; for example, Get Barcode will be recognised when the user says something like:

- Scan to pay on Example App

- Example App scan barcode

To implement Get Barcode in my existing app, I made the following changes:

Create a shortcuts.xml file and define a built-in Intent capability. I simply declared an explicit Intent to be sent to my application to fulfill the capability, but the mechanism is very flexible and more detail is available in the schema.

```xml
<capability android:name="actions.intent.GET_BARCODE">
    <intent
        android:action="android.intent.action.VIEW"
        android:targetPackage="com.darryncampbell.appactionsdemo"
        android:targetClass="com.darryncampbell.appactionsdemo.MainActivity">
    </intent>
</capability>
```

I defined only a single shortcut, which invokes the GET_BARCODE capability. Shortcuts are the kind of application capabilities that your app surfaces to the Google Assistant so that it can, in turn, surface them to your end users:

```xml
<shortcut
    android:shortcutId="PRICE_LOOKUP"
    android:shortcutShortLabel="@string/pluShort">
    <capability-binding android:key="actions.intent.GET_BARCODE">
    </capability-binding>
</shortcut>
```
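Each shortcut's capability-binding key has to match the name of a capability declared in the same file; if the two drift apart (for example through a typo), the shortcut is no longer bound to the capability. The following is a minimal plain-Kotlin sketch, not part of the sample app, of a build-time sanity check using the JDK's DOM parser. The function name `unboundCapabilityKeys` is hypothetical, and the sketch assumes the whole shortcuts.xml is available as a string.

```kotlin
import java.io.ByteArrayInputStream
import javax.xml.parsers.DocumentBuilderFactory
import org.w3c.dom.Element

// Hypothetical helper: returns capability-binding keys that do not match any
// <capability android:name="..."> declared in the same shortcuts.xml.
fun unboundCapabilityKeys(shortcutsXml: String): Set<String> {
    // The default factory is not namespace-aware, so attributes keep their
    // prefixed names, e.g. "android:name".
    val doc = DocumentBuilderFactory.newInstance()
        .newDocumentBuilder()
        .parse(ByteArrayInputStream(shortcutsXml.toByteArray(Charsets.UTF_8)))

    // Collects the value of [attr] from every element named [tag].
    fun attrValues(tag: String, attr: String): Set<String> {
        val nodes = doc.getElementsByTagName(tag)
        return (0 until nodes.length)
            .map { (nodes.item(it) as Element).getAttribute(attr) }
            .toSet()
    }

    val declared = attrValues("capability", "android:name")
    val bound = attrValues("capability-binding", "android:key")
    return bound - declared
}
```

An empty result means every binding points at a declared capability; anything returned is a key with no matching capability.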

You also need to reference your shortcuts.xml file from your Android manifest, inside the element for your launcher activity:

```xml
<meta-data
    android:name="android.app.shortcuts"
    android:resource="@xml/shortcuts" />
```
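Android expects this meta-data element inside an activity whose intent filter declares the MAIN action and the LAUNCHER category. As a hedged sketch, again plain Kotlin with the JDK's DOM parser and a hypothetical `launcherDeclaresShortcuts` function, that placement can be checked against a manifest string. This sketch only looks at activity elements and ignores activity-alias, which can also act as a launcher entry.

```kotlin
import java.io.ByteArrayInputStream
import javax.xml.parsers.DocumentBuilderFactory
import org.w3c.dom.Element

// Direct child elements of this element with the given tag name.
fun Element.childElements(tag: String): List<Element> =
    (0 until childNodes.length)
        .map { childNodes.item(it) }
        .filterIsInstance<Element>()
        .filter { it.tagName == tag }

// True if this element has a direct child <tag android:name="name" ...>.
fun Element.hasNamedChild(tag: String, name: String): Boolean =
    childElements(tag).any { it.getAttribute("android:name") == name }

// Hypothetical check: does some MAIN/LAUNCHER activity carry the
// android.app.shortcuts meta-data?
fun launcherDeclaresShortcuts(manifestXml: String): Boolean {
    // Not namespace-aware, so attributes are looked up as "android:name".
    val doc = DocumentBuilderFactory.newInstance()
        .newDocumentBuilder()
        .parse(ByteArrayInputStream(manifestXml.toByteArray(Charsets.UTF_8)))
    val activities = doc.getElementsByTagName("activity")
    return (0 until activities.length)
        .map { activities.item(it) as Element }
        .any { activity ->
            val isLauncher = activity.childElements("intent-filter").any { filter ->
                filter.hasNamedChild("action", "android.intent.action.MAIN") &&
                    filter.hasNamedChild("category", "android.intent.category.LAUNCHER")
            }
            isLauncher && activity.hasNamedChild("meta-data", "android.app.shortcuts")
        }
}
```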

When the Google Assistant recognises the GET_BARCODE intention, it sends an explicit Android Intent to the sample application. Because GET_BARCODE has no associated parameters, there is no easy way to distinguish this Intent from the other Intents the app receives (such as barcode scans). In a production app, the recommendation is to separate these capability handlers into distinct modules of your app, but for this demo I distinguish them from other Intents by the presence of the action token extra, which is present in all Assistant calls:

```kotlin
fun handleIntent(intent: Intent) {
    ...
    else if (intent.hasExtra("actions.fulfillment.extra.ACTION_TOKEN")) {
        // GET_BARCODE Intent entry point (App Actions): every Assistant call carries
        // this token, so its presence separates the voice request from scan Intents
        dwInterface.sendCommandString(applicationContext,
            DWInterface.DATAWEDGE_SEND_SET_SOFT_SCAN, "START_SCANNING")
    }
}
```
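Android's Intent class cannot be exercised off-device, so here is a plain-Kotlin sketch of the same routing decision. `IncomingIntent` is a hypothetical stand-in for android.content.Intent, and `SCAN_ACTION` is a hypothetical value standing in for whatever action your DataWedge profile's Intent output is configured to send; `com.symbol.datawedge.data_string` is the extra in which DataWedge delivers the decoded data.

```kotlin
// Hypothetical stand-in for android.content.Intent: an action plus its extras.
data class IncomingIntent(val action: String?, val extras: Map<String, Any?> = emptyMap())

// Extra present on every App Actions (Assistant) invocation.
val ACTION_TOKEN_EXTRA = "actions.fulfillment.extra.ACTION_TOKEN"

// Hypothetical action configured in the DataWedge profile's Intent output.
val SCAN_ACTION = "com.example.appactionsdemo.SCAN"

sealed class Route {
    data class ScanResult(val data: String) : Route()
    object StartSoftScan : Route()
    object Ignore : Route()
}

fun route(intent: IncomingIntent): Route = when {
    // A scan delivered by DataWedge: pull out the decoded barcode data.
    intent.action == SCAN_ACTION ->
        Route.ScanResult(intent.extras["com.symbol.datawedge.data_string"] as? String ?: "")
    // GET_BARCODE has no parameters, so the action token is the only distinguishing feature.
    ACTION_TOKEN_EXTRA in intent.extras -> Route.StartSoftScan
    else -> Route.Ignore
}
```

Checking the scan action first keeps DataWedge results on their own path; `Route.StartSoftScan` corresponds to the soft scan command the sample app sends through `dwInterface`.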

Applications that use DataWedge should also be aware that the Google Assistant may now pop up on top of your app and send it to the background. To stop DataWedge from switching profiles (which disables the scanner and can introduce delays), add the Google Assistant to the associated apps when you define your DataWedge profile. Take care that multiple apps do not all add the Assistant to their DataWedge profiles, since DataWedge associates an app with only one profile.

```kotlin
private fun createDataWedgeProfile() {
    ...
    // Associate every activity of this app with the profile
    val appConfig = Bundle()
    appConfig.putString("PACKAGE_NAME", packageName)
    appConfig.putStringArray("ACTIVITY_LIST", arrayOf("*"))
    // Also associate the Google Assistant, so DataWedge keeps this profile
    // active when the Assistant appears on top of the app
    val appConfigAssistant = Bundle()
    appConfigAssistant.putString("PACKAGE_NAME", "com.google.android.googlequicksearchbox") // Assistant
    appConfigAssistant.putStringArray("ACTIVITY_LIST", arrayOf("*"))
    profileConfig.putParcelableArray("APP_LIST", arrayOf(appConfig, appConfigAssistant))
    ...
}
```
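If several apps on a device each add the Assistant to their profile, those associations collide. A quick way to catch this before deployment is to look for profiles whose app associations overlap. This is a plain-Kotlin sketch rather than a DataWedge API: `AppAssociation` mirrors the PACKAGE_NAME / ACTIVITY_LIST bundle keys used above, and the profile names and map-based input are hypothetical; in practice they would come from however you manage your DataWedge configuration.

```kotlin
// One APP_LIST entry: a package and its activity list ("*" means all activities).
data class AppAssociation(val packageName: String, val activities: List<String> = listOf("*"))

val ASSISTANT_PACKAGE = "com.google.android.googlequicksearchbox"

// Two associations overlap if they name the same package and either claims
// every activity ("*") or both list at least one activity in common.
fun overlaps(a: AppAssociation, b: AppAssociation): Boolean =
    a.packageName == b.packageName &&
        ("*" in a.activities || "*" in b.activities || a.activities.any { it in b.activities })

// Returns each pair of profile names whose app associations overlap.
fun findAssociationConflicts(profiles: Map<String, List<AppAssociation>>): List<Pair<String, String>> {
    val names = profiles.keys.toList()
    val conflicts = mutableListOf<Pair<String, String>>()
    for (i in names.indices) {
        for (j in i + 1 until names.size) {
            val first = profiles.getValue(names[i])
            val second = profiles.getValue(names[j])
            if (first.any { a -> second.any { b -> overlaps(a, b) } }) {
                conflicts += (names[i] to names[j])
            }
        }
    }
    return conflicts
}
```

With two profiles that both list `ASSISTANT_PACKAGE` with `"*"`, the function reports that pair; only one of them should keep the Assistant association.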

Testing the Built-In Intent

The GET_BARCODE functionality can now be tested. Google recommends using the Google Assistant plugin for Android Studio to simulate the Assistant invoking the capability, as shown in the video below:

Ideally, I would like to show this running on a real device and responding to a real voice command: "OK Google, scan a barcode with the App Actions Demo". On-device testing with the Assistant is not that simple, however, because the app must first be approved through the Play Store's Assistant policy review process.

A few things to bear in mind when publishing your app:

- When you upload your application, you will need to define a privacy policy and accept the Google Actions terms and conditions under the advanced settings.

- In my experience, this review process is manual. Manual reviews take longer, and demo apps are less likely to be approved.

- The review process for Assistant actions does not hold up the overall review process for the app.

Using the sample app:

Because of the way the sample app is written, a scan started with the hardware trigger is indistinguishable from a scan invoked through the GET_BARCODE voice capability.

Part Two

Part two (coming soon) will explore custom Intents and summarise whether App Actions are well suited to Enterprise devices.

Darryn Campbell